Sparse Regression Modelling

Lecture 6 - MATH11301

Outline

- Regression Recap

- Subset Selection

- Shrinkage Methods

- Dimension Reduction

1. Regression Recap

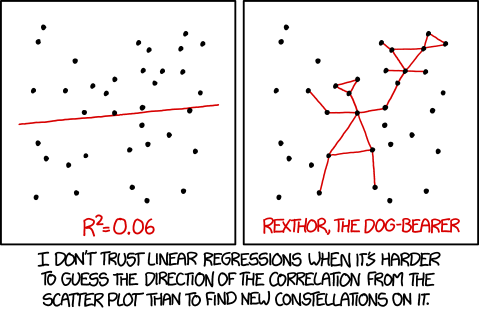

Simple Linear Regression

Given response \(y\) and single predictor \(x\), this model fits to \(y = \beta_{0} + \beta_{1}x\).

For \(n\) observations \((x^{(i)}, y^{(i)})\), the fit is found by minimizing the residual sum of squares:

\[\begin{equation} \min_{\beta_0,\beta_1} \left( \sum_{i=1}^n {\left({ y^{(i)} - \beta_0 - \beta_{1}x^{(i)} }\right)}^2 \right). \end{equation}\]

Multiple Linear Regression

Typically in real data, we have multiple predictors for a response variable.

Given \(n\) observations of

- response variable \(y\),

- vector of \(p\) predictors, \({\bf x} = (x_{1}, x_{2}, \ldots, x_{p})\),

the linear regression model is

\[ y = \beta_0 + \beta_1x_1 + \beta_2x_2 + \cdots + \beta_px_p + \varepsilon, \]

again, fit using least squares procedure:

\[\begin{equation} \min_{\beta_0, \beta_1, \ldots, \beta_p} \left(\sum_{i=1}^n {\left( y^{(i)} - \beta_0 - \sum_{j=1}^p\beta_{j}x_{j}^{(i)} \right)}^2 \right) =: \min_{{\bf\beta}}(\operatorname{RSS}). \end{equation}\]

What are the practical issues here?

\[ y = \beta_0 + \beta_1x_1 + \beta_2x_2 + \cdots + \beta_px_p \]

Example Linear Model

In IDS, we fit a linear model to the Lending Club’s loans dataset.

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | 1.89 | 0.21 | 9.01 | < 0.001 |

| income_Source Verified | 1.00 | 0.10 | 10.06 | < 0.001 |

| income_Verified | 2.56 | 0.12 | 21.87 | < 0.001 |

| debt_to_income | 0.02 | 0.00 | 7.43 | < 0.001 |

| credit_util | 4.90 | 0.16 | 30.25 | < 0.001 |

| bankruptcy1 | 0.39 | 0.13 | 2.96 | 0.003 |

| term | 0.15 | 0.00 | 38.89 | < 0.001 |

| credit_checks | 0.23 | 0.02 | 12.52 | < 0.001 |

| issue_monthJan-2018 | 0.05 | 0.11 | 0.42 | 0.674 |

| issue_monthMar-2018 | -0.04 | 0.11 | -0.39 | 0.696 |

2. Subset Selection

Model Selection

We have a model that works with all the predictors, but could we do better?

“Of two competing theories, the simpler explanation is to be preferred.”

(Ockham’s Razor)

Recall, to compare models of differing size we can use a metric such as the Akaike information criterion, which for least squares is

\[\begin{equation} \operatorname{AIC} = \frac{1}{n}\left( {\operatorname{RSS} + 2d\hat{\sigma}^2} \right). \end{equation}\]

or the adjusted \(R^2\) value,

\[\begin{equation} \operatorname{Adjusted}~R^2 = 1 - \frac{\operatorname{RSS}/(n-d-1)}{\operatorname{TSS}/(n-1)}, \end{equation}\]

where \(\operatorname{TSS} = \sum_i {(y_i-\bar{y})}^2\) and \(d\) is the number of variables in the model.

Backward Selection

Starting with full model, successively remove predictors until the criterion can’t improve.

full_model <- lm(interest_rate~., data=loans_ids)

backward <- step(full_model, direction='backward')Start: AIC=29106.05

interest_rate ~ income_ + debt_to_income + credit_util + bankruptcy +

term + credit_checks + issue_month

Df Sum of Sq RSS AIC

- issue_month 2 13.3 184240 29103

<none> 184227 29106

- bankruptcy 1 161.6 184389 29113

- debt_to_income 1 1021.7 185249 29159

- credit_checks 1 2896.3 187123 29260

- income_ 2 8845.9 193073 29570

- credit_util 1 16918.2 201145 29980

- term 1 27962.5 212190 30514

Step: AIC=29102.77

interest_rate ~ income_ + debt_to_income + credit_util + bankruptcy +

term + credit_checks

Df Sum of Sq RSS AIC

<none> 184240 29103

- bankruptcy 1 162.1 184402 29110

- debt_to_income 1 1023.7 185264 29156

- credit_checks 1 2898.7 187139 29257

- income_ 2 8836.1 193076 29566

- credit_util 1 16921.3 201162 29977

- term 1 27964.1 212204 30510Forward Selection

Starting with the empty model, successively add predictors until criterion can’t improve.

empty_model <- lm(interest_rate~1, data=na.omit(loans_ids))

forward <- step(empty_model, direction='forward', scope = formula(full_model))Start: AIC=32096.14

interest_rate ~ 1

Df Sum of Sq RSS AIC

+ term 1 31980 217097 30728

+ credit_util 1 16104 232972 31431

+ income_ 2 15101 233976 31476

+ debt_to_income 1 5018 244059 31895

+ credit_checks 1 4297 244780 31925

+ bankruptcy 1 576 248501 32075

<none> 249077 32096

+ issue_month 2 25 249052 32099

Step: AIC=30727.54

interest_rate ~ term

Df Sum of Sq RSS AIC

+ credit_util 1 17987.8 199109 29867

+ income_ 2 10212.9 206884 30251

+ debt_to_income 1 3921.0 213176 30548

+ credit_checks 1 3635.1 213462 30561

+ bankruptcy 1 556.7 216540 30704

<none> 217097 30728

+ issue_month 2 6.3 217091 30731

Step: AIC=29866.88

interest_rate ~ term + credit_util

Df Sum of Sq RSS AIC

+ income_ 2 10562.8 188546 29327

+ credit_checks 1 4000.8 195109 29666

+ debt_to_income 1 2011.2 197098 29768

+ bankruptcy 1 332.0 198777 29852

<none> 199109 29867

+ issue_month 2 4.5 199105 29871

Step: AIC=29327.2

interest_rate ~ term + credit_util + income_

Df Sum of Sq RSS AIC

+ credit_checks 1 3119.02 185427 29163

+ debt_to_income 1 1114.30 187432 29270

+ bankruptcy 1 297.31 188249 29314

<none> 188546 29327

+ issue_month 2 18.80 188528 29330

Step: AIC=29162.83

interest_rate ~ term + credit_util + income_ + credit_checks

Df Sum of Sq RSS AIC

+ debt_to_income 1 1025.02 184402 29110

+ bankruptcy 1 163.43 185264 29156

<none> 185427 29163

+ issue_month 2 15.80 185412 29166

Step: AIC=29109.54

interest_rate ~ term + credit_util + income_ + credit_checks +

debt_to_income

Df Sum of Sq RSS AIC

+ bankruptcy 1 162.075 184240 29103

<none> 184402 29110

+ issue_month 2 13.712 184389 29113

Step: AIC=29102.77

interest_rate ~ term + credit_util + income_ + credit_checks +

debt_to_income + bankruptcy

Df Sum of Sq RSS AIC

<none> 184240 29103

+ issue_month 2 13.253 184227 29106Hybrid Approaches

Backward selection requires that \(n > p\) in order to yield a unique least-squares solution. Forward selection works with high-dimensional data when \(n < p\) (potentially \(n \ll p\)).

In practice, we often take combined approach: starting from the empty model, whenever a new variable is added, remove any now-obsolete variables.

The aim is to mimic the ‘benefits’ of an exhaustive search while retaining subset selection’s efficiency.

Importantly though, they do not guarantee optimality!

Both work well if \(p \ll d\), but neither is performant when we expect the model to use even a moderate number of variables.

3. Shrinkage Methods

Penalty Terms

Subset selection isn’t the only way improve models. Here’s a more optimum approach.

To penalise models whose coefficient vectors have become too ‘unruly’, we use a penalty

\[\begin{equation} P(\beta) = \lambda \|\beta\|, \end{equation}\]

where \(\|\beta\|\) is a vector-norm and \(\lambda\geq0\) is a tuning parameter.

Working from the least squares problem, \(\min_{{\bf\beta}}(\operatorname{RSS})\), we add this to the objective to give

\[\begin{equation} \min_{\beta} \left(\sum_{i=1}^n {\left( y^{(i)} - \beta_0 - \sum_{j=1}^p\beta_{j}x_{j}^{(i)} \right)}^2 + \lambda\|\beta\| \right), \end{equation}\]

Note: we can show the above is the Lagrange form of constraining least squares by some \({\|\beta\|} \leq t\).

The Ridge

In Ridge regression, we apply the \(\mathcal{l}_2\) norm, solving \(\min_{\beta} (RSS + \lambda\sum_{i=1}^p\beta_j^2 )\).

Note that \(\beta_i\neq0\) for most \(i\) and \(\lambda\) here.

The Lasso

With Lasso regression we apply the \(\mathcal{l}_1\) norm, solving \(\min_{\beta} (RSS + \lambda\sum_{i=1}^p|\beta_j^{\phantom{'}}| )\).

Note that \(\beta_i=0\) for some \(i\) and \(\lambda\) here.

What’s the key difference here?

\[ l_1~\text{vs.}~l_2 \]

Constraint Comparison

Larger Dataset

Unlike Ridge, Lasso performs variable selection. This creates sparse models.

Elastic Net

Could instead use a combined penalty term, \(P(\alpha,\beta) = \frac{1}{2}(1-\alpha){\|\beta\|}_2^2 + \alpha{\|\beta\|}_1\).

4. Dimension Reduction

Placeholder

mpg cyl disp hp

Min. :10.40 Min. :4.000 Min. : 71.1 Min. : 52.0

1st Qu.:15.43 1st Qu.:4.000 1st Qu.:120.8 1st Qu.: 96.5

Median :19.20 Median :6.000 Median :196.3 Median :123.0

Mean :20.09 Mean :6.188 Mean :230.7 Mean :146.7

3rd Qu.:22.80 3rd Qu.:8.000 3rd Qu.:326.0 3rd Qu.:180.0

Max. :33.90 Max. :8.000 Max. :472.0 Max. :335.0

drat wt qsec vs

Min. :2.760 Min. :1.513 Min. :14.50 Min. :0.0000

1st Qu.:3.080 1st Qu.:2.581 1st Qu.:16.89 1st Qu.:0.0000

Median :3.695 Median :3.325 Median :17.71 Median :0.0000

Mean :3.597 Mean :3.217 Mean :17.85 Mean :0.4375

3rd Qu.:3.920 3rd Qu.:3.610 3rd Qu.:18.90 3rd Qu.:1.0000

Max. :4.930 Max. :5.424 Max. :22.90 Max. :1.0000

am gear carb

Min. :0.0000 Min. :3.000 Min. :1.000

1st Qu.:0.0000 1st Qu.:3.000 1st Qu.:2.000

Median :0.0000 Median :4.000 Median :2.000

Mean :0.4062 Mean :3.688 Mean :2.812

3rd Qu.:1.0000 3rd Qu.:4.000 3rd Qu.:4.000

Max. :1.0000 Max. :5.000 Max. :8.000 End